Over the past few years, 3D printing has become a frequent subject of discussion in the library world. Library makerspaces have proliferated. Patrons have printed innovative and essential objects, including prosthetic hands. Now that 3D printers are becoming a common feature in public libraries, we’re beginning to see discussion of their potential legal dangers and the formulation of rules to keep this new technology from creating problems. Debate continues over whether 3D printers represent a major source of innovation or a passing fad; we at Unbound even weighed in on the subject last year. Whether or not we’ll all have 3D printers in our homes one day, it’s undeniable that they’ve made an impact. Today we’ll take a look at two new technologies that work alongside 3D printers to expand the range of what they can produce. One of them is currently finding its way into libraries across the country, and the other is still a laboratory prototype.

3D scanners are tools for turning physical objects into digital models. Just as the 3D printer brought manufacturing from the factory into the home, 3D scanners make three-dimensional imaging possible without a large, expensive device like an MRI scanner. Indeed, many 3D scanners on the market today are made by the same companies that produce 3D printers. The devices make sense together: one could scan an object and then use the resulting model to print a replica of it. But how accurate will that replica be?

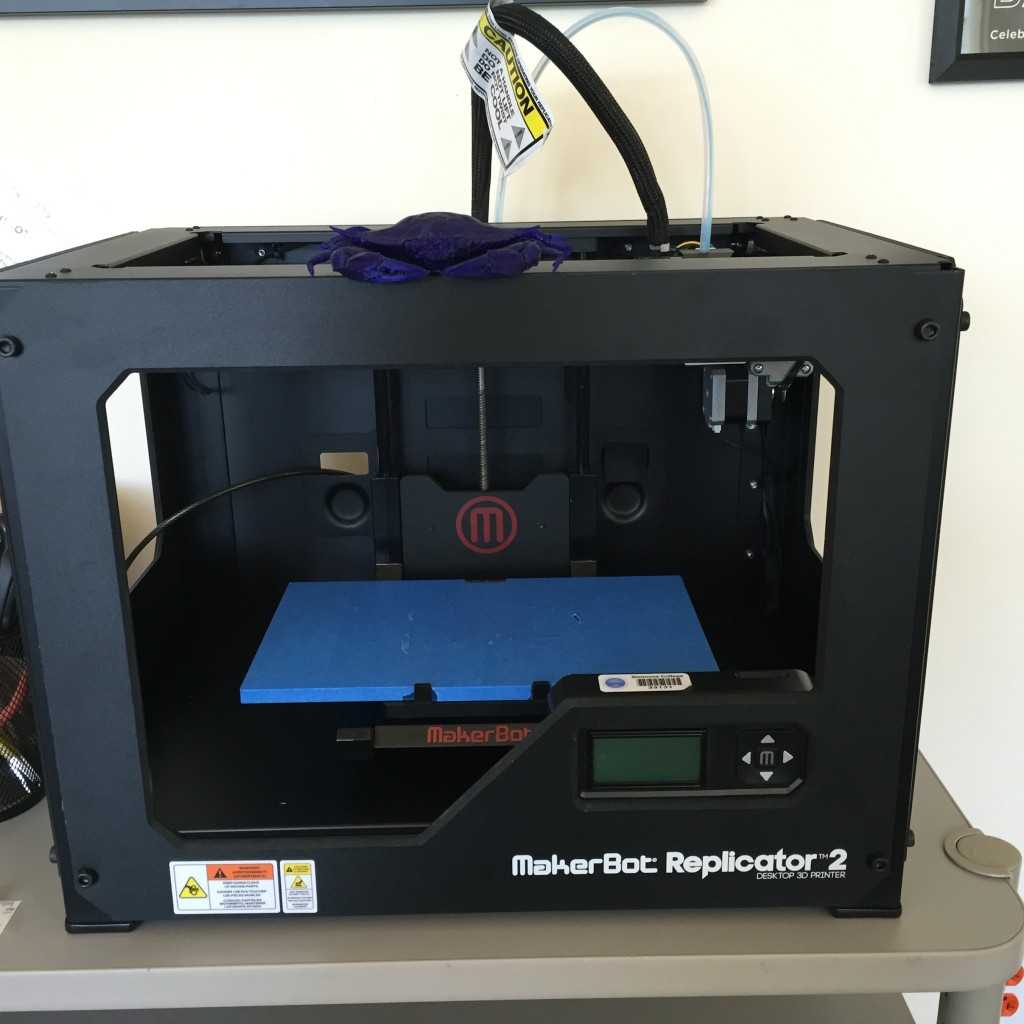

Here at SLIS, our tech lab owns a 3D scanner called the MakerBot Digitizer. To learn more about its efficacy, I consulted with Linnea Johnson (Manager of Technology) and Gabriel Sanna (SLIS student). The Digitizer is surprisingly lightweight and streamlined. I was expecting a heavy, brutalist object, but instead I was presented with something resembling a small, abstracted replica of the starship Enterprise. We tested the device by scanning Gabriel’s sunglasses.

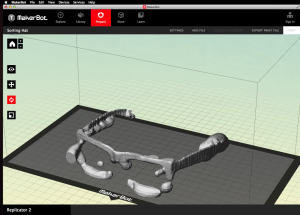

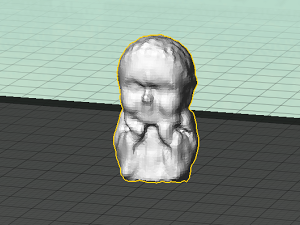

As you can see, the scanned glasses were not entirely accurate. The general shape was mostly preserved, but the details were rough, and the area around the lenses came out especially strange. The scanner works by tracing the object with two lasers while it rotates on a built-in turntable. Glare on the lenses disrupted this process and made it difficult for the system to get an accurate read on them. MakerBot recommends coating reflective objects in a thin layer of baby powder to remedy this issue, but we elected not to fill every crevice in Gabriel’s glasses with fragrant powder. Next, we scanned a small statue from Linnea’s office, and this time we printed the result.
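
For readers curious about what “tracing the object with two lasers” involves, here is a simplified Python sketch of the general idea behind turntable laser scanning (laser triangulation). It is not MakerBot’s software. It assumes a single laser projecting a vertical plane through the turntable’s axis, an idealized orthographic camera with no lens distortion, and stripe offsets already converted to millimeters; the function and parameter names are hypothetical. Each camera frame yields a line of points at one turntable angle. Rotating those points back by that angle places them on the object, and sweeping all the way around builds up a point cloud. For example, a perfect cylinder of radius 10 mm viewed at alpha = 30° shows a constant stripe offset of 5 mm, and every reconstructed point lands 10 mm from the axis.

```python
import math
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def stripe_to_points(offsets: List[Tuple[float, float]],
                     theta: float,
                     alpha: float) -> List[Point3]:
    """Turn one frame's laser-stripe detections into 3D points on the object.

    Hypothetical simplified model, not any vendor's actual pipeline.
    offsets: (u, z) pairs, where u is the stripe's sideways offset from the
             turntable axis as seen by the camera and z is the height (both in mm).
    theta:   turntable angle (radians) when the frame was captured.
    alpha:   angle (radians) between the camera's view direction and the laser plane.
    """
    sin_a = math.sin(alpha)
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    points = []
    for u, z in offsets:
        # Triangulation: a point at distance r from the axis, lying in the laser
        # plane, appears offset by r * sin(alpha) for an idealized orthographic camera.
        r = u / sin_a
        # In the fixed lab frame the point sits at (r, 0, z). The turntable has
        # rotated the object by theta, so rotate by -theta to express the point
        # in the object's own coordinates.
        points.append((r * cos_t, -r * sin_t, z))
    return points


def scan(frames: List[Tuple[float, List[Tuple[float, float]]]],
         alpha: float) -> List[Point3]:
    """Accumulate a point cloud from (theta, offsets) frames over a full rotation."""
    cloud: List[Point3] = []
    for theta, offsets in frames:
        cloud.extend(stripe_to_points(offsets, theta, alpha))
    return cloud
```

A glossy lens breaks the key assumption in this model: if the laser bounces off the surface, the stripe the camera sees is not where the laser actually hit, so the computed distance r is wrong. That is one plausible way odd geometry can appear around reflective parts like Gabriel’s lenses.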

With no glare to contend with, the scanner captured the statue’s overall shape much more accurately. The finer details were captured at fairly low resolution, but you can still tell what you’re looking at. Printing the model cost additional resolution, and the finished object came out looking rather vague (and orange). Admittedly, this process represents the minimal-effort use case for the scanner. Canny users could take the model it produces and correct it manually before printing, which would yield a much higher-quality result.

Still, it seems clear that prosumer 3D scanners have a ways to go. A trained and dedicated user could certainly save a good deal of 3D modeling time, as long as they were willing to put some effort into correcting the original scan. However, the device isn’t magic: in most circumstances, a novice user should not expect an immediately accurate replica of their object. The technology is still in its early stages, and I expect it to improve dramatically in the coming years.

If the 3D scanner is still young, this next technology hasn’t even been born yet. At this year’s SIGGRAPH 2015 conference (run by the ACM Special Interest Group on Computer GRAPHics and Interactive Techniques), a research group from Zhejiang University will be presenting a new process they call “computational hydrographic printing.”

Hydrographic printing has been around for some time. It’s a method for applying colors and patterns to objects. Basically, you produce a colored plastic film, float it on a pool of water, spray the film with chemicals that activate bonding agents within the plastic, and then dunk an object into the water, allowing the film to wrap around the object and coat it with color. Hydrographic printing is good at applying solid colors and patterns, but it’s not so great when you need accuracy. Dipping an object by hand is an imprecise art: it’s difficult to keep a steady hand throughout the process, and nearly impossible to account for the way the film stretches and warps as it bonds to the object. You’d be fine printing a brick-wall pattern onto a mask, but trying to print a face would work maybe once in a hundred tries.

That’s where computational hydrographic printing comes in. The Zhejiang University researchers have developed a new device and process that can print hydrographically with an accuracy and consistency that was previously impossible. Their technique couples cheap, off-the-shelf components (binder clips, an Xbox Kinect, a linear DC motor) with advanced physics simulation software that can predict how the film will stretch when an object is immersed.

By simulating the dunk in advance, the software can generate a design that is distorted just enough to compensate for the stretching of the film once it is placed onto the object. This design can be printed onto the film with a common inkjet printer. The rig incorporates an aluminum arm that grips the object, along with a 3D visual feedback system that keeps the arm steady and in position for a precisely executed dunk. The result is so consistent that a single object can be immersed multiple times to print complex designs onto all sides of it, as seen in the above gif.
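
The pre-distortion idea can be illustrated with a toy, one-dimensional Python sketch. Suppose a simulation tells us where each point on the flat film ends up on the object’s surface after the dunk. To get a desired design onto the surface, we print at each film point the color the design calls for at that point’s destination. The researchers’ system works with real 2D film on curved 3D surfaces and a full physics simulation; here the stretch mapping is a made-up formula, and all function names are hypothetical.

```python
from typing import Callable, List


def predistort(design: Callable[[float], float],
               film_to_surface: Callable[[float], float],
               film_length: float,
               samples: int) -> List[float]:
    """Return the pattern to print on a flat 1D strip of film.

    film_to_surface(x) gives where film position x lands on the object
    (as distance along the surface) after the dunk. Printing
    design(film_to_surface(x)) at x means that, once the film stretches,
    every surface point s ends up carrying design(s).
    """
    step = film_length / (samples - 1)
    return [design(film_to_surface(i * step)) for i in range(samples)]


def toy_stretch(x: float) -> float:
    # Hypothetical stretch model: the film is pulled harder the farther along it you go.
    # In a real system this mapping would come from a physics simulation of the dunk.
    return x + 0.2 * x * x


def stripes(s: float) -> float:
    # Target design on the surface: alternating stripes, each 1 unit wide.
    return 1.0 if int(s) % 2 == 0 else 0.0


film = predistort(stripes, toy_stretch, film_length=2.0, samples=201)
```

With this made-up stretch, which pulls harder toward the far end of the film, the printed stripes come out progressively narrower along the film, so that they even out into uniform stripes once the film stretches over the object.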

If this technology continues to develop, it could easily sit alongside a 3D printer in a library’s makerspace. 3D printers already work from digital models, and the same model could be fed directly into a hydrographic printer. After 3D printing an object, you would only need to send that 3D model and a 2D design to the hydrographic printer to paint your object with excellent accuracy.

It isn’t hard to imagine a future library makerspace where you could use a 3D scanner to model an object, use a 3D printer to create a physical copy of it, and then use a hydrographic printer to paint it. It wouldn’t quite be a Star Trek replicator, but it wouldn’t be too shabby either.

(Post by Derek Murphy)
